Processing Engine


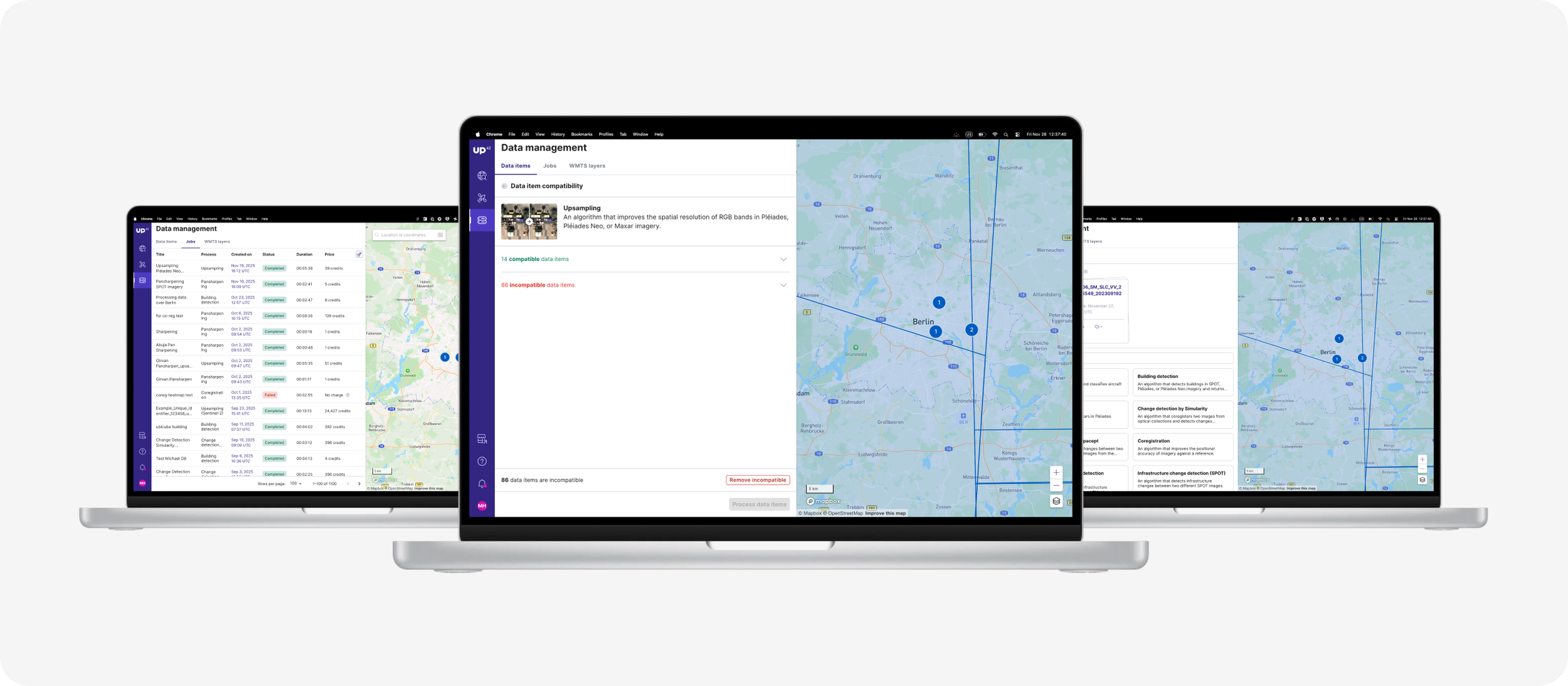

UP42 set out to bring Processing Engine 2.0 directly into the Console, enabling users to run advanced algorithms, such as car detection and NDVI, on their purchased imagery without writing code or relying on external tools. The goal was to create an uninterrupted journey from data purchase to processing and insights, while preserving the flexibility that advanced users expect.

UP42 2023

Product Designer & Researcher

TL;DR

We brought Processing Engine 2.0 into the UP42 Console, enabling advanced processing without coding. Over a 3-week sprint, we mapped system flows, designed the asset selection, process discovery, and job management views, and validated early concepts with users. The MVP focused on quick-run defaults and transparent cost and runtime information, while laying out a roadmap for advanced controls such as area-of-interest (AOI) clipping and band selection.

Solution

Across a focused 3-week concept sprint, we mapped backend workflows, designed core processing flows in the Console, and validated early prototypes with customers. The result was a streamlined, Console-native processing experience that balanced simplicity for new users with transparency and control for power users.

Research Plan

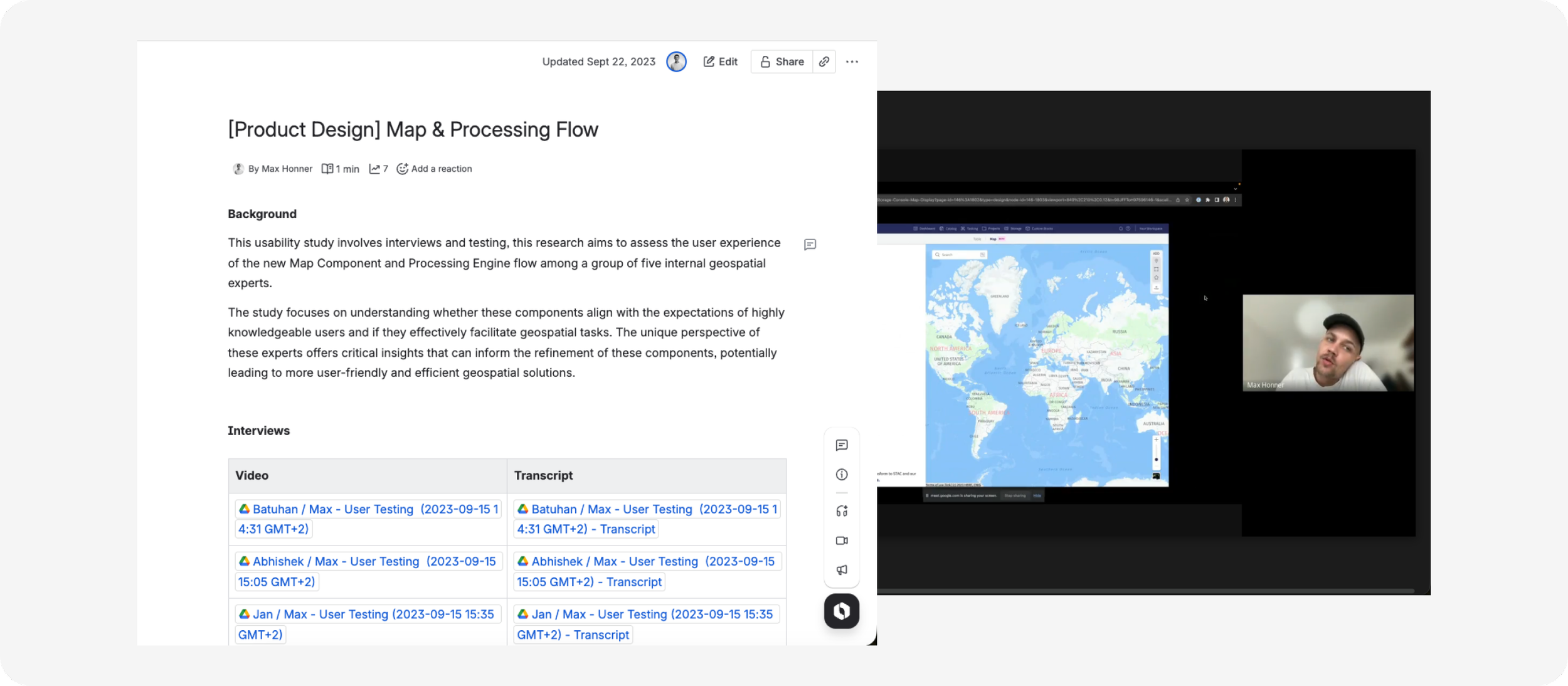

Conducted service blueprinting covering user actions, API events, credit usage, and backend execution (Docker containers, GPU allocation).

Identified key error states such as unsupported spectral bands and insufficient credits.

Clarified the main challenge: simplifying processing for newcomers while still communicating cost, runtime, and compatibility.
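The two error states identified in the blueprint (unsupported spectral bands and insufficient credits) are the kind of checks that can run before a job is submitted. A minimal Python sketch of such a pre-flight check, assuming hypothetical `Asset` and `Algorithm` records and a flat per-asset credit price; none of these names or prices come from UP42's actual API or pricing.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    # Hypothetical asset record: an ID plus the spectral bands the image contains.
    asset_id: str
    bands: frozenset[str]


@dataclass(frozen=True)
class Algorithm:
    # Hypothetical algorithm record: the bands it needs and an assumed
    # flat credit price per processed asset (not UP42's real pricing).
    name: str
    required_bands: frozenset[str]
    credits_per_asset: int


def preflight_errors(algorithm: Algorithm, assets: list[Asset], credit_balance: int) -> list[str]:
    """Collect user-facing errors before submission; an empty list means the job can run."""
    errors = []
    # Error state 1: an asset lacks a spectral band the algorithm requires.
    for asset in assets:
        missing = algorithm.required_bands - asset.bands
        if missing:
            errors.append(
                f"{asset.asset_id}: unsupported for {algorithm.name}, missing bands {sorted(missing)}"
            )
    # Error state 2: the estimated job cost exceeds the account's credit balance.
    cost = algorithm.credits_per_asset * len(assets)
    if cost > credit_balance:
        errors.append(f"Insufficient credits: job needs {cost}, balance is {credit_balance}")
    return errors


# Usage: NDVI needs red and near-infrared bands; the price of 10 credits is made up.
ndvi = Algorithm("NDVI", frozenset({"red", "nir"}), credits_per_asset=10)
rgb_only = Asset("scene-a", frozenset({"red", "green", "blue"}))
multispectral = Asset("scene-b", frozenset({"red", "green", "blue", "nir"}))
print(preflight_errors(ndvi, [rgb_only, multispectral], credit_balance=15))
```

Here the RGB-only scene is flagged for its missing NIR band, and the 20-credit job exceeds the 15-credit balance. Collecting every error instead of stopping at the first one lets the interface show all blockers at once, which fits the goal of communicating cost and compatibility up front.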

Research & Synthesis

Users consistently needed to:

Select single or multiple assets.

Discover relevant algorithms for their imagery.

Run and monitor processing jobs with full clarity on credits and backend implications.

Research surfaced a critical insight: users cared most about what they could run on their data, not low-level technical details. This led us to prioritize algorithm metadata and compatibility indicators throughout the flow.

Design & Validation

Asset Selection: introduced grid and table views supporting both single and batch selection, with clear compatibility markers.

Process Discovery: created a filterable catalog of algorithms driven by metadata, showing contextual availability (e.g., certain models only available on 50 cm imagery).

Job Management: added progress tracking, upfront credit-cost estimates, and links to SDK documentation for users needing AOI or band-level control.
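Metadata-driven contextual availability can be illustrated with a resolution threshold, mirroring the example of models limited to 50 cm imagery. A minimal Python sketch, assuming hypothetical catalog fields (`category`, `max_gsd_cm`) and made-up thresholds; the real Processing Engine metadata schema may look quite different.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    # Hypothetical metadata fields; the real Processing Engine schema may differ.
    name: str
    category: str
    max_gsd_cm: float  # coarsest resolution (ground sample distance, cm) the model accepts


@dataclass(frozen=True)
class SelectedAsset:
    asset_id: str
    gsd_cm: float  # resolution of the purchased image, in cm


def availability(
    catalog: list[CatalogEntry],
    assets: list[SelectedAsset],
    category: str | None = None,
) -> dict[str, list[str]]:
    """Map each catalog entry (optionally filtered by category) to the selected assets it supports."""
    result: dict[str, list[str]] = {}
    for entry in catalog:
        if category is not None and entry.category != category:
            continue  # skip entries outside the user's chosen catalog filter
        # Finer imagery has a smaller GSD, so it passes the threshold.
        result[entry.name] = [a.asset_id for a in assets if a.gsd_cm <= entry.max_gsd_cm]
    return result


# Usage with made-up thresholds: car detection limited to 50 cm or finer imagery.
catalog = [
    CatalogEntry("Car detection", "object detection", max_gsd_cm=50),
    CatalogEntry("NDVI", "spectral index", max_gsd_cm=1000),
]
assets = [SelectedAsset("scene-30cm", 30), SelectedAsset("scene-10m", 1000)]
print(availability(catalog, assets))
```

An empty list for an entry could drive a "not available for this imagery" marker, so the catalog explains why an algorithm is unavailable rather than silently hiding it.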

Tested low-fidelity prototypes with 5 customers, who favored quick-run defaults over granular settings for the first release.

Impact

Short-term (Q3): shipped an end-to-end processing flow for full-image analysis directly in the Console.

Long-term: defined a roadmap for advanced AOI clipping, band selection, and previewing outputs before full job execution.

Key Insight: surfacing clear algorithm metadata was essential. Users wanted confidence in what’s possible before thinking about how it works.